
Beating torch.compile: 12× faster compilation time at Toongether.ai

Feb 20, 2025

Nils Fleischmann

ML Research Engineer

John Rachwan

Cofounder & CTO

Bertrand Charpentier

Cofounder, President & Chief Scientist

Toongether is a B2C app for web comics asset generation that brings illustrations to life with the help of AI. If you join their Discord, you'll find a passionate community of over 500 comics fans (myself included!). After our initial collaboration with Scenario, the Toongether team was curious: could Pruna AI’s optimization stack deliver even more performance gains than torch.compile? Time to put it to the test.

Character generation and post-processing

Toongether’s core pipeline focuses on batch character generation and post-processing, including background removal, refinement, and upscaling.

End to end, this process took approximately 15 seconds. We can’t disclose the per-step breakdown for competitive confidentiality reasons, but the Toongether team was actively looking for ways to reduce it.

They were using torch.compile but faced two key limitations:

The average efficiency gain was only around 5%.

The initial compilation warm-up time was over 10 minutes, making it impractical for their use case.
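Compilation is a one-off cost per process, whereas any speed-up is collected on every call, so it helps to measure the two separately. The helper below is a minimal, framework-agnostic sketch (the name `measure_warmup` is ours, not part of any library): it times the first call on its own and reports the median of the calls that follow. If `fn` only launches asynchronous CUDA work, it should call `torch.cuda.synchronize()` before returning so the timings reflect real GPU time.

```python
import statistics
import time
from typing import Callable, Dict


def measure_warmup(fn: Callable[[], object], steady_runs: int = 5) -> Dict[str, float]:
    """Time the first call of `fn` separately from the calls that follow it."""
    if steady_runs < 1:
        raise ValueError("steady_runs must be at least 1")

    # First call: includes any one-off cost such as graph capture and compilation.
    start = time.perf_counter()
    fn()
    first_call_s = time.perf_counter() - start

    # Subsequent calls: the steady-state latency users actually experience.
    steady = []
    for _ in range(steady_runs):
        start = time.perf_counter()
        fn()
        steady.append(time.perf_counter() - start)

    steady_median_s = statistics.median(steady)
    return {
        "first_call_s": first_call_s,
        "steady_median_s": steady_median_s,
        "warmup_overhead_s": first_call_s - steady_median_s,
    }
```

With `fn` wrapping one call of a compiled pipeline, `warmup_overhead_s` approximates the compilation cost. As a rough break-even check, assuming the 5% gain shaves 0.5 s off a hypothetical 10-second call, a 600 s warm-up is only recovered after 600 / 0.5 = 1,200 calls on each new worker.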

This led them to explore Pruna AI, primarily for two reasons:

Productivity: They wanted to be more efficient and needed a way to smash a pipeline, save it to disk, and deploy new workers without waiting for models to recompile.

Inference speed-up: Faster inference directly translates to lower costs.
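The cost argument is simple arithmetic: on a GPU billed by the hour, the cost of a job is proportional to the GPU time it occupies, so an N× speed-up divides it by N. The sketch below assumes jobs run one at a time on a dedicated, fully utilized GPU; the function name is ours, and the $2/hour price is a placeholder, not a real quote.

```python
def cost_per_1k_jobs(latency_s: float, gpu_usd_per_hour: float, speedup: float = 1.0) -> float:
    """GPU cost in USD of 1,000 jobs run back to back on one dedicated GPU.

    A speed-up factor of N divides the GPU-seconds each job occupies by N,
    and the hourly bill scales with those GPU-seconds.
    """
    if latency_s <= 0 or speedup <= 0 or gpu_usd_per_hour < 0:
        raise ValueError("latency and speed-up must be positive; price must be non-negative")
    gpu_seconds = 1000 * latency_s / speedup
    return gpu_seconds / 3600 * gpu_usd_per_hour


# Hypothetical inputs: the 15 s end-to-end latency from the text and a placeholder $2/hour price.
baseline = cost_per_1k_jobs(15, 2.0)
faster = cost_per_1k_jobs(15, 2.0, speedup=1.5)
print(f"baseline: ${baseline:.2f}, with a 1.5x speed-up: ${faster:.2f}")
```

With these placeholder inputs, 1,000 jobs cost about $8.33 at baseline and about $5.56 after a 1.5× speed-up.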

In some cases, teams look into optimization when they are close to GPU saturation, which prevents them from scaling up their workloads. That wasn’t a blocker for Toongether yet; their focus was on delivering the best possible user experience.

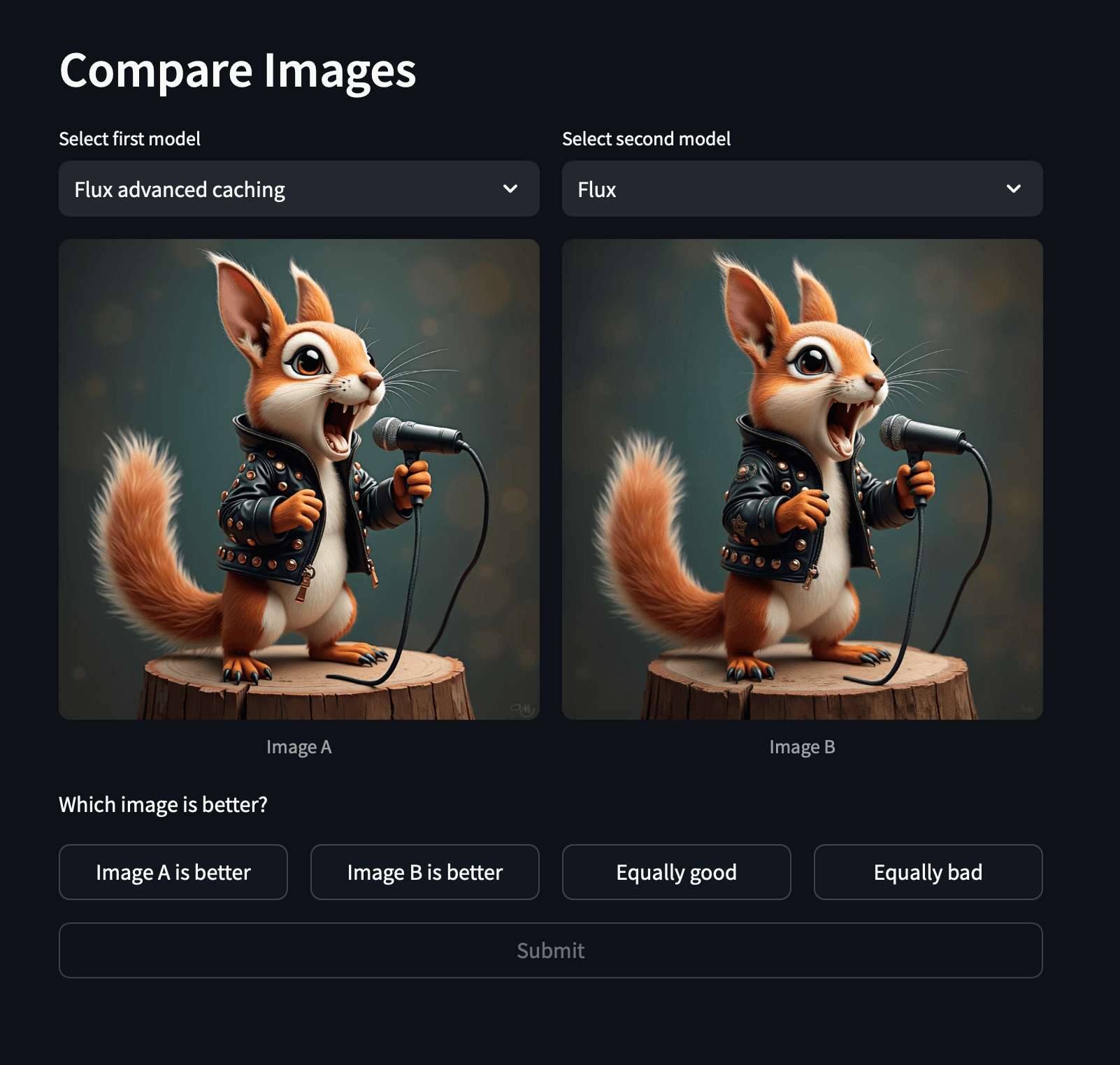

Comparing SD-XL: torch.compile vs Pruna

To speed up the process, we directly replicated their pipeline under exact production conditions and ran a benchmark for them. This way, integrating the optimizations would be as simple as adding a few code snippets in their notebook.

They provided us with a notebook (with secrets removed), and these were the key constraints:

The U-Net is shared between the main pipeline and the refiner pipeline.

IP-adapters are loaded and unloaded dynamically depending on the workflow, while the same U-Net is reused across workflows.

The pipeline runs on L40S GPUs.

One critical requirement was transparency: they wanted a clear understanding of what was happening under the hood. No black box. That aligned well with Pruna’s approach, where the optimization agent provides full access to hyperparameter configurations, detailing exactly which methods were applied and how.

In just a few hours, we were done. The results: 12× faster compilation time and +40% inference speed-up.
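Percentage speed-ups are easy to misread, so the arithmetic behind headline numbers like these is worth spelling out. The sketch below (helper names are ours) starts from the "over 10 minutes" warm-up in the text, so 600 s is a lower bound, and shows that "N× faster" and "N% less latency" are different quantities.

```python
def time_after_speedup(baseline_s: float, speedup: float) -> float:
    """Wall-clock time once a step runs `speedup` times faster."""
    return baseline_s / speedup


def latency_cut_from_speedup(speedup: float) -> float:
    """Fraction of latency removed by a speed-up factor (1.4 means 40% faster)."""
    return 1 - 1 / speedup


def speedup_from_latency_cut(cut: float) -> float:
    """Speed-up factor implied by removing a fraction `cut` of the latency."""
    return 1 / (1 - cut)


# Compilation: a warm-up of at least 10 minutes, made 12x faster.
print(time_after_speedup(10 * 60, 12))          # 50.0 (seconds)
# Reading "+40%" as a 1.4x throughput gain: about 28.6% less latency.
print(round(latency_cut_from_speedup(1.4), 3))  # 0.286
# Reading it as a 40% latency cut instead: about a 1.67x speed-up.
print(round(speedup_from_latency_cut(0.4), 2))  # 1.67
```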

Here’s the full comparison table:

stable-fast maintenance, OOM issues & evaluation

It’s worth mentioning that Toongether.ai was already familiar with stable-fast. However, the repository is no longer maintained: it still relies on PyTorch 2.1, and the last update was nearly a year ago. One key advantage of using Pruna is that we maintain compatibility for every method integrated into our package, stable-fast included, which avoids potential issues down the line.
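An unmaintained extension that ships compiled code is typically tied to the PyTorch release it was built against, so a deployment script can fail fast when the installed version drifts. The guard below is a hypothetical sketch: the function names are ours, and the `(2, 1)` pin is illustrative, not read from stable-fast's own metadata.

```python
import re
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Extract the numeric major.minor.patch prefix, e.g. '2.1.2+cu121' -> (2, 1, 2)."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def require_minor(installed: str, expected: Tuple[int, int]) -> None:
    """Raise if `installed` is not in the expected major.minor release series."""
    if parse_version(installed)[:2] != tuple(expected):
        raise RuntimeError(
            f"built for {expected[0]}.{expected[1]}.x, found {installed}; "
            "rebuild or pin the dependency before deploying"
        )
```

At worker start-up, this could be called as `require_minor(importlib.metadata.version("torch"), (2, 1))`, so a mismatched image fails immediately instead of mid-request.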

They also needed a solution to resolve OOM issues while keeping memory optimization lossless. Pruna supports quantization for diffusion models (see quanto), and reducing the model’s weights to 8-bit would be nearly lossless, with an expected memory reduction of roughly 2×. However, quantization is not strictly lossless, which made it unsuitable for their specific constraint.
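The roughly 2× figure follows from bytes per weight: a 16-bit weight takes 2 bytes and an 8-bit weight takes 1. A back-of-the-envelope sketch, assuming about 2.6 billion parameters for the SD-XL U-Net (the figure reported in the SD-XL paper) and counting weights only:

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed to store the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumption: ~2.6B parameters for the SD-XL U-Net; weights only, no activations.
UNET_PARAMS = 2.6e9

fp16_gb = weight_memory_gb(UNET_PARAMS, 16)
int8_gb = weight_memory_gb(UNET_PARAMS, 8)
print(f"16-bit: {fp16_gb:.1f} GB, 8-bit: {int8_gb:.1f} GB, reduction: {fp16_gb / int8_gb:.1f}x")
```

This prints `16-bit: 5.2 GB, 8-bit: 2.6 GB, reduction: 2.0x`. Activations, the text encoders, the VAE and the small overhead of quantization scales are not included; starting from 32-bit weights, the reduction would be closer to 4×.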

Lastly, as highlighted in our previous case studies with Scenario and Photoroom, evaluation remains an unsolved challenge. Toongether is still early in their journey and doesn’t yet have a consistent evaluation framework, partly because their models and application concept are iterating rapidly. They are nonetheless fully aware of the importance of visual evaluation: currently, their AI team reviews results manually, a process that takes time and effort. That’s another area where Pruna could help. Our Evaluation Agent automates part of this workflow, allowing their team to focus on improving models and delivering new features to the comics community.
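One inexpensive check a team can automate before any human review is to run the same prompts with the same seeds through the baseline and the optimized pipeline and flag pairs whose outputs drift. The sketch below uses PSNR (peak signal-to-noise ratio) on flattened 8-bit pixel values; it is a generic technique, not a description of how Pruna's Evaluation Agent works, and the 30 dB threshold is an arbitrary placeholder. Images are passed as flat sequences to keep the example dependency-free; in practice they would be loaded with an imaging library.

```python
import math
from typing import List, Sequence, Tuple


def psnr(reference: Sequence[int], candidate: Sequence[int], max_value: int = 255) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(candidate) or not reference:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(reference, candidate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


def flag_regressions(
    pairs: Sequence[Tuple[Sequence[int], Sequence[int]]], threshold_db: float = 30.0
) -> List[int]:
    """Indices of (baseline, optimized) pairs whose PSNR falls below the threshold."""
    return [i for i, (ref, cand) in enumerate(pairs) if psnr(ref, cand) < threshold_db]
```

PSNR only measures pixel-level fidelity, so flagged pairs would still go to a human reviewer; the point is to shrink the pile, not to replace visual judgment.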

Bottom line: Toongether cut latency by 40%, eliminated the 10-minute warm-up bottleneck, and ensured long-term maintainability, all in just two days. If you’re looking to optimize inference or evaluation in your own setup, reach out! We’ll help you get it done just as fast.
