Technical Articles, Integration

Flux Schnell 3x Faster, 3x Cheaper? Mission Accomplished for Each AI

Nov 8, 2024

Quentin Sinig

Go-to-Market Lead

Bertrand Charpentier

Cofounder, President & Chief Scientist

Each AI x Pruna AI: Together, To Make AI More Accessible

Pruna AI is on a mission to make AI more efficient and sustainable by offering a cutting-edge model optimization engine. Each AI, on the other hand, designs powerful workflows by integrating the best vision-based AI models available from top providers like Minimax, Hailuo AI, Elevenlabs, Runway, and more. But what if the base models could actually be optimized versions?!

Each AI recently reached out to Pruna AI with a straightforward goal: enhance performance and reduce costs. In a matter of days, we went from initial talks to fully deploying an impactful use case, marking the start of an exciting partnership! Together, as co-members of the NVIDIA Inception Program, we shared a strong foundation to drive efficiency further in AI workflows.

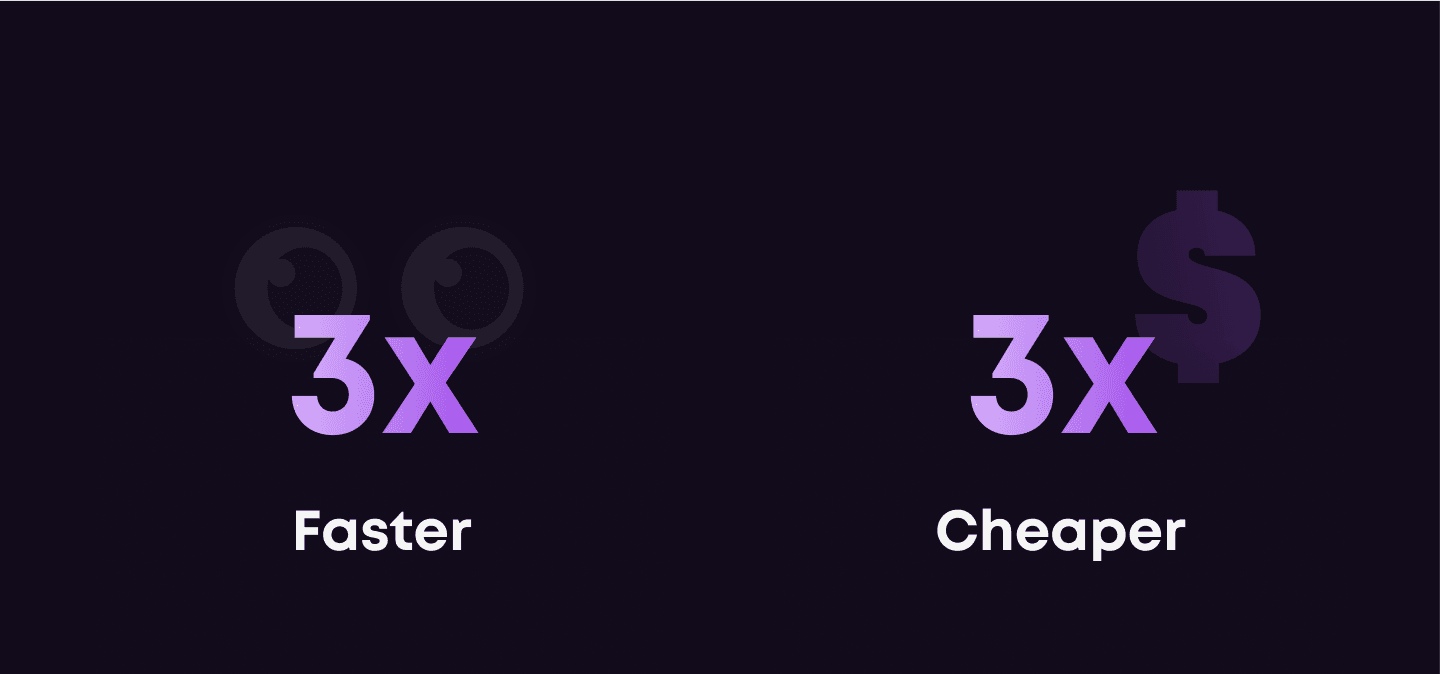

Flux Schnell: 3x Faster, 3x Cheaper? Mission Accomplished.

At Pruna AI, we had already released both 4-bit and 8-bit versions of Flux Schnell and knew its capabilities well. In our tests, running on an A100 with a 10124x1024 pixels asset, we had already observed a +26% speed improvement, achieving generation times of 1.7 seconds. But we went further in this deployment, with even better results!

Cheaper? Check. The cost per use went down significantly. Originally deployed on an A100-40 instance, Flux Schnell now runs smoothly on an A10G instance.

Faster? You Bet! Processing time dropped from 10 seconds to just 3 seconds—a 3x speedup. While a small portion of time (due to Kubernetes Pod startup) remains beyond compression’s reach, the results were impressive regardless.

Quality Test Drive? Tested and Proven. To ensure optimized performance, Pruna’s Flux Playground tool verified metrics for accuracy and consistency. Each AI then routed 10% of their production traffic through the optimized model to test for any potential issues. With 10 requests per second, the model maintained high performance without a hitch. Confident in the results, they’re now moving to make Pruna’s optimized model the default!

Speed & Cost Efficiency: Make or Break for AI-Native Startups

In a world where the trend is to build AI products with VC funding, reaching profitability quickly is essential. It’s all too easy to take the “lazy” path of relying solely on funding. Now is the time to be scrappy, and do more with less. AI-native startups like Each AI face a tough reality: funding can run out faster than their models become profitable. That’s why performance and cost efficiency are essential, not optional. Faster processing enhances user experience and drives conversion to paid plans, boosting revenue. At the same time, controlling compute costs is critical to preserving margins as the platform scales. In short, speed and cost control are make-or-break factors in today’s competitive AI landscape.

From Zero to Production in Days

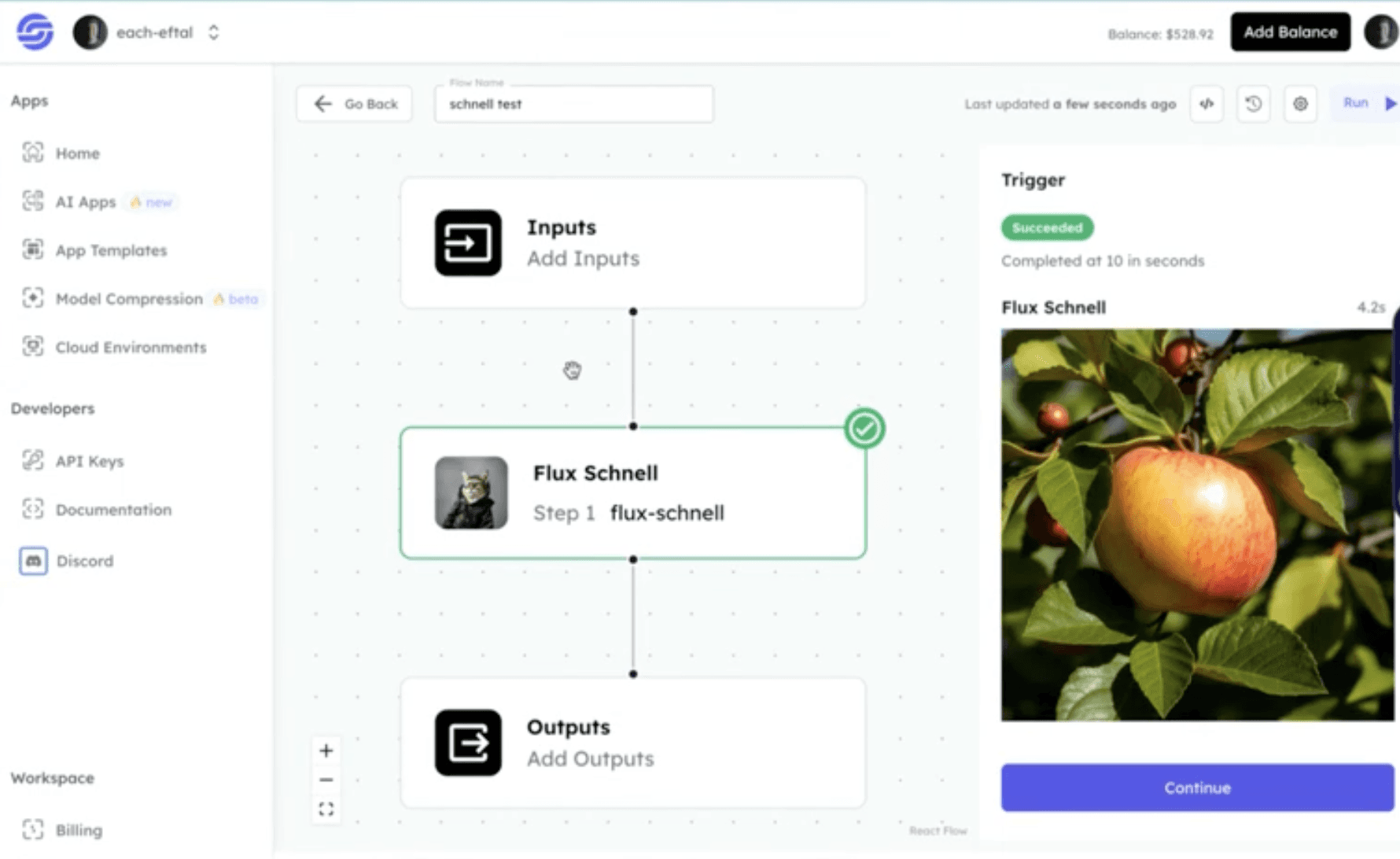

We all know there’s nothing like real details to see how a project actually gets done, right? Here’s the exact workflow Eftal (Each AI’s CEO) and his team followed, so you can see it wasn’t magic—just solid engineering.

Development Environment: They loaded everything up on a Jupyter Notebook (psst, check this one!) for development.

Setup: They installed the required dependencies (transformers, python packages…) and downloaded files from Hugging Face repository.

Implementation: They started with sample code snippets from Pruna’s documentation, incorporating custom schedulers and tokenizers, and upgraded to PyTorch 2.4.1.

Deployment: Final deployment was managed on cloud serverless services.

This efficient approach got the model up and running with minimal friction, proving that even complex integrations can be quick with the right resources and guidance.

More Optimized Models on the Horizon!

With over 90 models on Each AI’s platform, we’re only getting started. Our teams are already exploring Stable Diffusion and LLMs as the next optimization targets. Mochi 1 is also on our radar; it currently requires 4 H100s to run, but given our recent successes—like reducing DRBX’s requirements from 4xA100s to 1xA100—Mochi 1 seems like a fitting challenge.

What’s next for you? Try Each AI’s free tier to experience the difference firsthand, and let us know what you think!

・

Nov 8, 2024

Subscribe to Pruna's Newsletter